In communication and network technologies, the research center aims to meet demanding network specifications for latency, reliability, and number of connections required by perceptual networking.

The work focuses on researching and developing key technologies such as low-latency and low age-of-information (AoI) communication, semantic communication, and IoT platforms and their management.

By integrating artificial intelligence technologies, the research moves beyond traditional coding and transmission technologies.

Among these, the development of highly efficient semantic communication technologies from the sensing end to the generation end is a forward-looking research goal.

By exploiting the intelligent computing capabilities of both the sensing end and the generation end, this work aims to reduce data volume by several orders of magnitude and, by controlling the age of information, to meet the low-latency requirements of applications.
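
Age of information (AoI) is commonly defined as Δ(t) = t - U(t), where U(t) is the generation time of the freshest update the receiver holds at time t. As a minimal sketch of how this metric is measured, the hypothetical helper `average_aoi` below integrates the sawtooth age curve over a finite horizon. The input format of (generation time, reception time) pairs and the assumption that the receiver starts with age 0 at t = 0 are illustrative choices, not details taken from the Center's work.

```python
from typing import Iterable, Tuple


def average_aoi(updates: Iterable[Tuple[float, float]], horizon: float) -> float:
    """Time-average age of information over [0, horizon].

    Hypothetical helper. Each update is (generation_time, reception_time).
    Assumes the receiver holds a fresh update at t = 0 (age 0).
    """
    if horizon <= 0:
        raise ValueError("horizon must be positive")
    latest_gen = 0.0  # generation time of the freshest update received so far
    t_prev = 0.0      # time of the previous reception event
    area = 0.0        # running integral of the age curve t - latest_gen
    for gen, rec in sorted(updates, key=lambda upd: upd[1]):
        if gen > rec:
            raise ValueError("an update cannot be received before it is generated")
        if rec > horizon:
            break
        # Age grows linearly between receptions: integrate (t - latest_gen) over [t_prev, rec].
        area += ((rec - latest_gen) ** 2 - (t_prev - latest_gen) ** 2) / 2
        t_prev = rec
        # A stale (out-of-order) update does not reduce the age.
        latest_gen = max(latest_gen, gen)
    # Final segment from the last reception to the end of the horizon.
    area += ((horizon - latest_gen) ** 2 - (t_prev - latest_gen) ** 2) / 2
    return area / horizon


if __name__ == "__main__":
    # One update generated at t = 1 and delivered at t = 2, observed until t = 4.
    print(average_aoi([(1.0, 2.0)], horizon=4.0))  # 1.5
```

Lowering AoI therefore requires both frequent updates and short delivery delays, which is why relaying and scheduling choices matter for information freshness.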

Low Latency and Low Age Communication: Under the low-latency communication requirement, a primary focus of our research is the design of low-latency decoders. Polar codes, known for approaching channel capacity, have been adopted by fifth-generation (5G) mobile communication systems. Belief propagation (BP) decoders can be implemented in parallel and therefore meet low-latency requirements better than other decoders; however, their error-correction performance still lags behind that of successive cancellation list (SCL) decoding. To address this, we developed an enhanced SCL decoding method based on the newly proposed sparse even-column matrix, achieving performance close to that of SCL decoding and approaching its lower bound. Additionally, we proposed an analysis tool and a polar code construction method for STR decoding. Computer simulations verified that the codes constructed by our Center outperform both the polar codes used in 5G mobile communication systems and those designed for successive cancellation (SC) decoding. The research results were published in the Proceedings of the IEEE International Symposium on Information Theory (ISIT) and the Proceedings of the Symposium on Information Theory.
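
For readers less familiar with polar decoding, the sketch below shows textbook polar encoding (x = u * F^(kron n) over GF(2) with F = [[1, 0], [1, 1]], in natural order without bit reversal) and a plain successive cancellation (SC) decoder using the min-sum approximation. It is a baseline illustration only, not the Center's enhanced SCL decoder or its code construction. The toy (8, 4) frozen set and the LLR convention (positive values favour bit 0) are assumptions chosen for the example.

```python
import math
from typing import List, Sequence, Tuple


def polar_encode(u: Sequence[int]) -> List[int]:
    """x = u * F^(kron n) over GF(2), F = [[1, 0], [1, 1]], natural bit order."""
    if len(u) == 1:
        return [u[0]]
    half = len(u) // 2
    a = polar_encode(u[:half])  # contribution of the first half of u
    b = polar_encode(u[half:])  # contribution of the second half of u
    return [ai ^ bi for ai, bi in zip(a, b)] + b


def f_minsum(l1: float, l2: float) -> float:
    """Min-sum approximation of the LLR of (x1 XOR x2)."""
    return math.copysign(1.0, l1) * math.copysign(1.0, l2) * min(abs(l1), abs(l2))


def g_update(l1: float, l2: float, a: int) -> float:
    """LLR of the second-half bit given the already-decided partial sum a."""
    return l2 + (1 - 2 * a) * l1


def sc_decode(llr: Sequence[float], frozen: Sequence[bool]) -> Tuple[List[int], List[int]]:
    """Return (u_hat, x_hat); frozen bits are fixed to 0."""
    if len(llr) == 1:
        bit = 0 if frozen[0] or llr[0] >= 0 else 1
        return [bit], [bit]
    half = len(llr) // 2
    left, right = llr[:half], llr[half:]
    # Decode the first half from the combined (XOR) LLRs, then reuse its re-encoded bits.
    u1, a = sc_decode([f_minsum(l1, l2) for l1, l2 in zip(left, right)], frozen[:half])
    u2, b = sc_decode([g_update(l1, l2, ai) for l1, l2, ai in zip(left, right, a)], frozen[half:])
    return u1 + u2, [ai ^ bi for ai, bi in zip(a, b)] + b


if __name__ == "__main__":
    frozen = [True, True, True, False, True, False, False, False]  # toy (8, 4) code
    info = [i for i, fz in enumerate(frozen) if not fz]
    u = [0] * 8
    for pos, bit in zip(info, [1, 0, 1, 1]):
        u[pos] = bit
    x = polar_encode(u)
    llr = [4.0 if xi == 0 else -4.0 for xi in x]  # noiseless BPSK channel
    u_hat, _ = sc_decode(llr, frozen)
    print(u_hat == u)  # True
```

Because each bit decision depends on all earlier ones, SC decoding is inherently sequential; SCL keeps several candidate paths to recover from early mistakes, while BP trades this sequential structure for parallelism.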

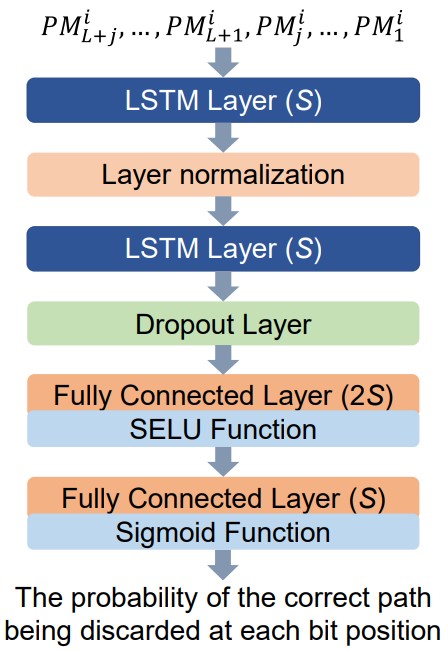

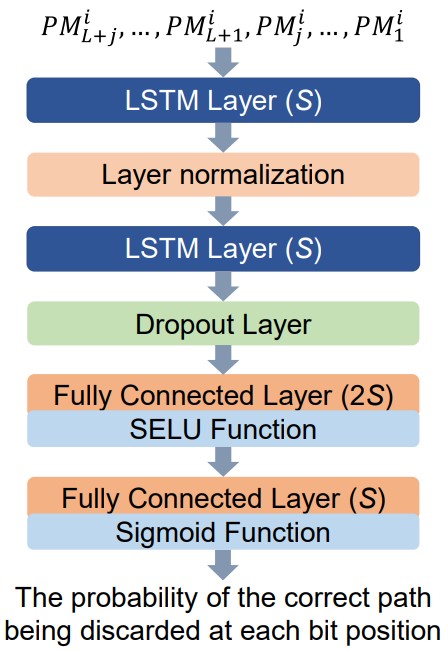

Simulation results demonstrate that the deep-learning-aided SCL flip (DL-Aided SCLF) decoding algorithm, based on the proposed stacked LSTM flip-1, stacked LSTM flip-2, and stacked LSTM CFC models, outperforms other state-of-the-art decoding algorithms while requiring fewer decoding attempts on average. Because these models are trained before decoding, most of the added complexity is incurred in the offline phase. The research results were published in IEEE Transactions on Cognitive Communications and Networking (Early Access).

Figure B-15: Flip position prediction structure based on stacked LSTM models
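
The flipping idea behind SCLF can be illustrated with its simpler single-path relative, SC-Flip: if the first SC pass fails an error-detection check, the decoder re-runs SC with one information-bit decision flipped, and the number of passes is the "decoding attempts" counted above. In this sketch, flip candidates are ranked by the classic smallest-|LLR| heuristic, the kind of hand-crafted rule that a learned flip-position predictor such as the stacked LSTM models aims to improve on. A single even-parity bit stands in for the CRC, and the toy (8, 4) code, the LLR vector, and all function names are illustrative assumptions.

```python
import math
from typing import Callable, Dict, List, Optional, Sequence, Tuple


def f_minsum(l1: float, l2: float) -> float:
    """Min-sum approximation of the LLR of (x1 XOR x2)."""
    return math.copysign(1.0, l1) * math.copysign(1.0, l2) * min(abs(l1), abs(l2))


def sc_decode(
    llr: Sequence[float],
    frozen: Sequence[bool],
    flip_at: Optional[int],
    offset: int,
    leaf_llr: Dict[int, float],
) -> Tuple[List[int], List[int]]:
    """SC decoding that records each leaf's decision LLR and can flip one decision.

    frozen is indexed by global bit position; offset is the global index of llr's first leaf.
    """
    if len(llr) == 1:
        leaf_llr[offset] = llr[0]
        bit = 0  # frozen bits are fixed to 0
        if not frozen[offset]:
            bit = 0 if llr[0] >= 0 else 1
            if offset == flip_at:
                bit ^= 1  # forced flip of this information-bit decision
        return [bit], [bit]
    half = len(llr) // 2
    left, right = llr[:half], llr[half:]
    u1, a = sc_decode([f_minsum(x, y) for x, y in zip(left, right)],
                      frozen, flip_at, offset, leaf_llr)
    u2, b = sc_decode([y + (1 - 2 * ai) * x for x, y, ai in zip(left, right, a)],
                      frozen, flip_at, offset + half, leaf_llr)
    return u1 + u2, [ai ^ bi for ai, bi in zip(a, b)] + b


def sc_flip_decode(
    llr: Sequence[float],
    frozen: Sequence[bool],
    check: Callable[[List[int]], bool],
    max_flips: int,
) -> Tuple[List[int], int]:
    """Return (u_hat, number of SC decoding attempts)."""
    leaf_llr: Dict[int, float] = {}
    u_hat, _ = sc_decode(llr, frozen, None, 0, leaf_llr)
    attempts = 1
    if check(u_hat):
        return u_hat, attempts
    info = [i for i, fz in enumerate(frozen) if not fz]
    # Heuristic stand-in for a learned predictor: try the least reliable decisions first.
    candidates = sorted(info, key=lambda i: abs(leaf_llr[i]))[:max_flips]
    for pos in candidates:
        attempts += 1
        trial, _ = sc_decode(llr, frozen, pos, 0, {})
        if check(trial):
            return trial, attempts
    return u_hat, attempts  # every flip failed: fall back to the first estimate


if __name__ == "__main__":
    frozen = [True, True, True, False, True, False, False, False]  # toy (8, 4) code
    info = [i for i, fz in enumerate(frozen) if not fz]

    def even_parity(u: List[int]) -> bool:
        # The last information bit carries the parity of the other three.
        return sum(u[i] for i in info) % 2 == 0

    # All-zero codeword sent; the first two channel LLRs have the wrong sign.
    llr = [-3.0, -3.0, 1.0, 1.0, 4.0, 4.0, 3.0, 3.0]
    print(sc_flip_decode(llr, frozen, even_parity, max_flips=2))  # ([0, 0, 0, 0, 0, 0, 0, 0], 2)
```

A better flip-position predictor finds the erroneous decision in fewer trials, which is what reduces the average number of decoding attempts.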

Recent rapid advances in artificial intelligence, especially large language models (LLMs) such as OpenAI's ChatGPT and Google's Gemini, have made it possible to efficiently compress complex data, including articles, images, and video. These breakthroughs have been described as the "emergence" of capabilities and are attributed to two key factors: the very large number of neural network parameters (about 175 billion) and massive training data sets drawn from across the internet.
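
The link between prediction and compression behind this claim can be made concrete: with arithmetic coding, a symbol that a model predicts with probability p costs about -log2 p bits, so a better predictor yields a shorter encoding. The sketch below computes this ideal code length for a toy adaptive character model with add-one (Laplace) smoothing. It is an illustrative stand-in for an LLM's next-token probabilities, and the function name is hypothetical.

```python
import math
from collections import Counter


def ideal_code_length_bits(text: str, alphabet_size: int = 256) -> float:
    """Sum of -log2 p(symbol) under an adaptive order-0 model with add-one smoothing.

    Hypothetical helper; assumes every symbol comes from an alphabet of alphabet_size symbols.
    """
    counts: Counter = Counter()
    seen = 0
    bits = 0.0
    for ch in text:
        p = (counts[ch] + 1) / (seen + alphabet_size)  # prediction made before seeing ch
        bits -= math.log2(p)
        counts[ch] += 1
        seen += 1
    return bits


if __name__ == "__main__":
    sample = "ab" * 500
    print(ideal_code_length_bits(sample), "bits vs", 8 * len(sample), "bits uncompressed")
```

This order-0 model only learns symbol frequencies; a model that also captured the alternating pattern would predict each character with probability close to 1, pushing the cost per character toward zero.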

To provide a theoretical basis for semantic communication technologies and to better understand this emergence phenomenon, we are developing mathematical models that attempt to explain it. A preprint of our results is available on arXiv.

Figure B-16: Intermediate Relay System for Maintaining Information Freshness

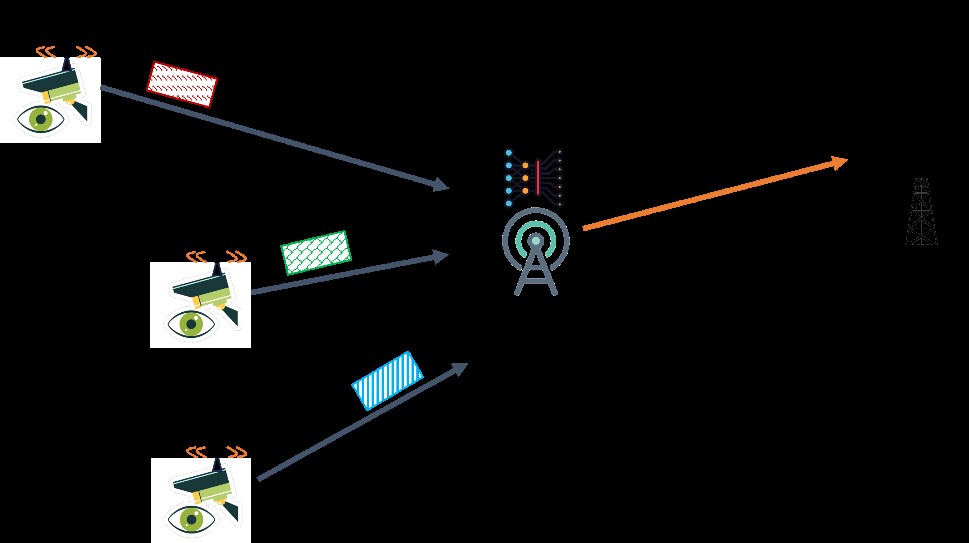

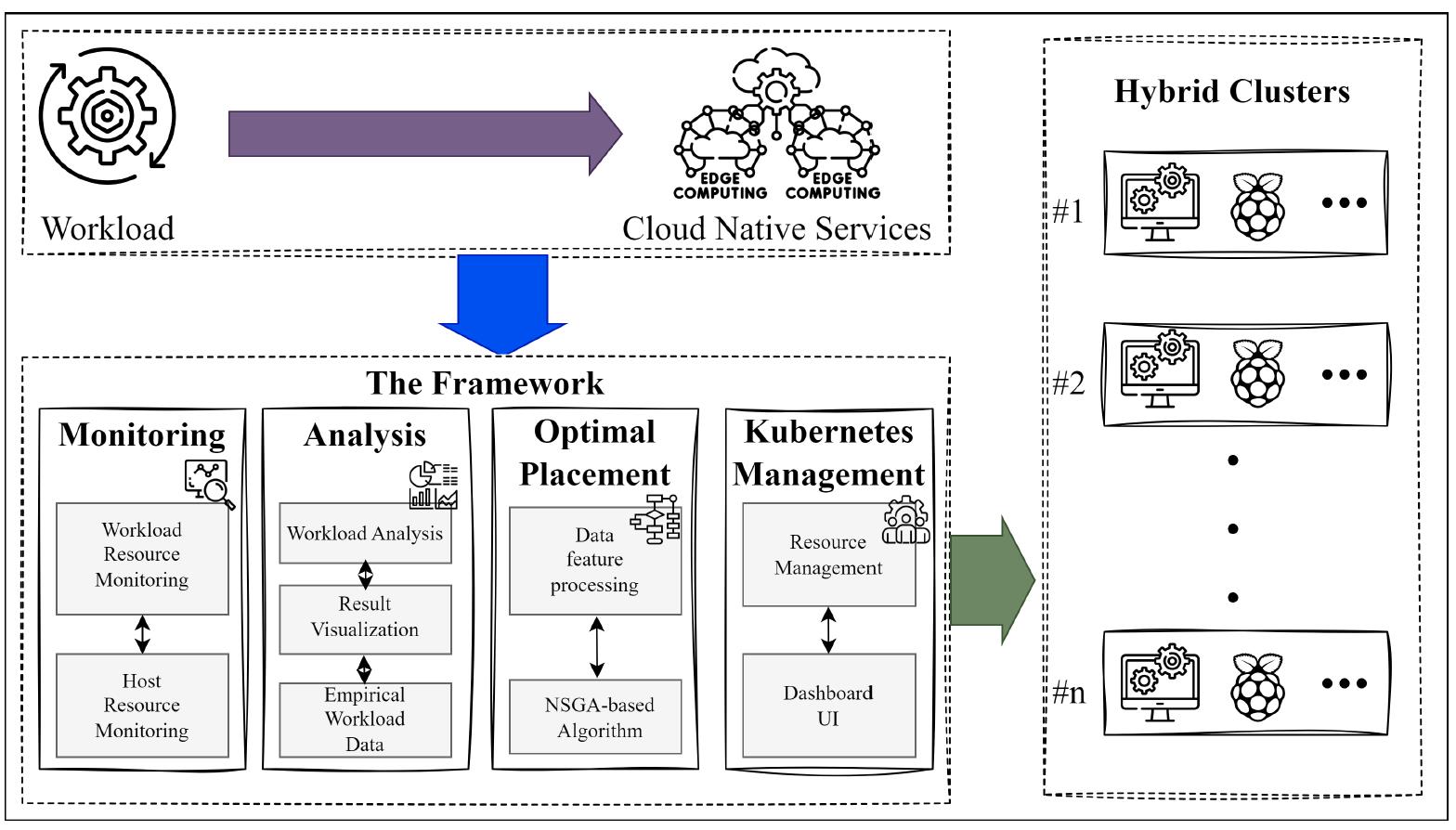

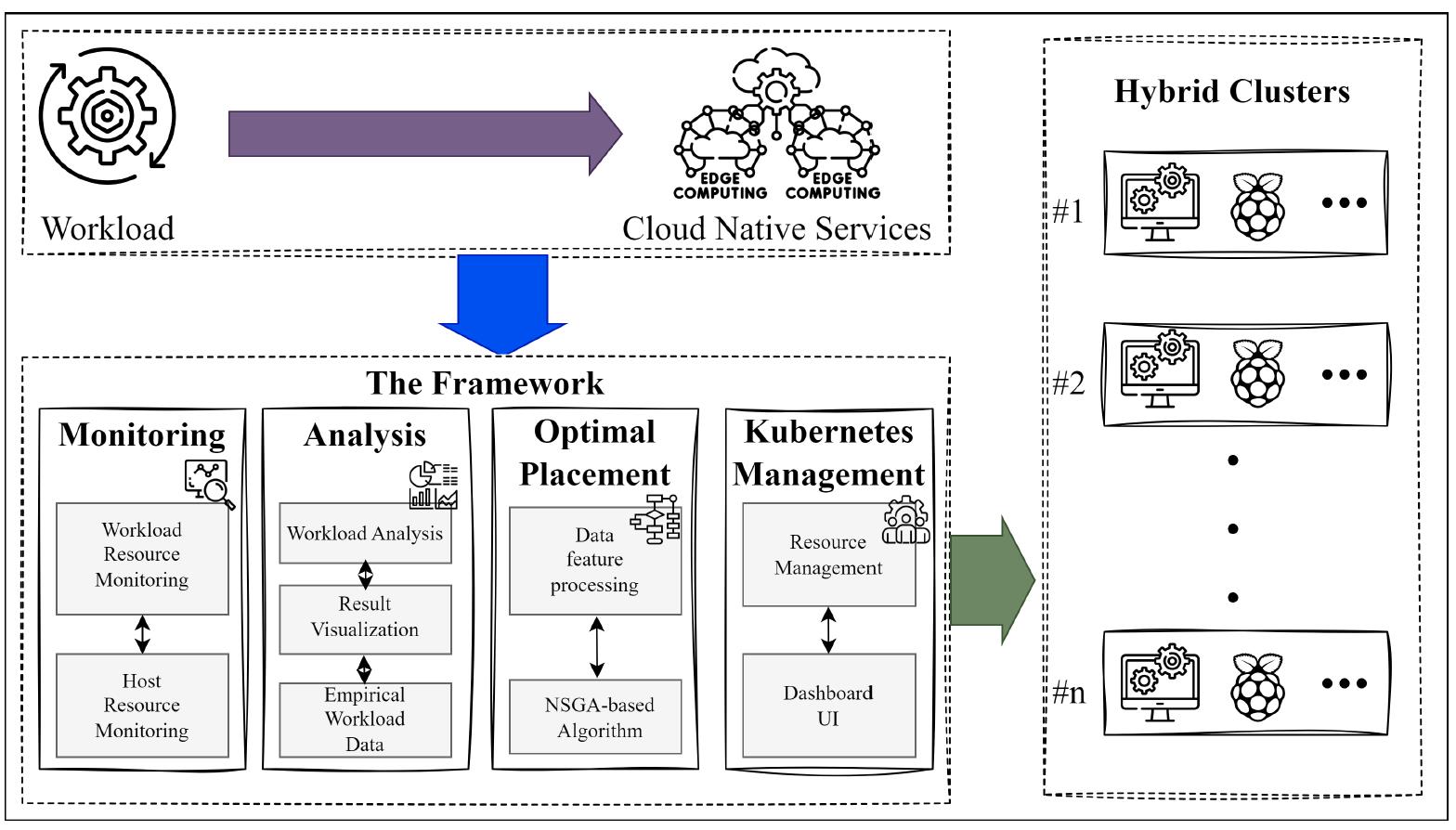

Managing Internet of Things (IoT) resources in cloud networks is highly complex because application services have diverse requirements. This year, we focused on optimizing microservice management and resource allocation in heterogeneous cloud environments for IoT applications. To tackle this challenge, we introduced a management framework called the Multi-Objective Microservice Allocation Algorithm (MOMA). MOMA addresses two crucial factors in microservice resource allocation: maximizing resource utilization and minimizing network communication costs. By formulating these objectives as a constrained optimization problem, the framework enables efficient resource management across diverse cloud systems. This approach streamlines cloud service deployment, simplifies workload monitoring, and enhances analytical capabilities. We comprehensively evaluated MOMA against existing algorithms using real-world datasets. The experimental results show that MOMA significantly improves resource utilization, reduces network transmission costs, and enhances network reliability. Detailed findings have been submitted to IEEE Access and are under review.

Figure B-18: Heterogeneous Cloud Microservice Management Framework Architecture

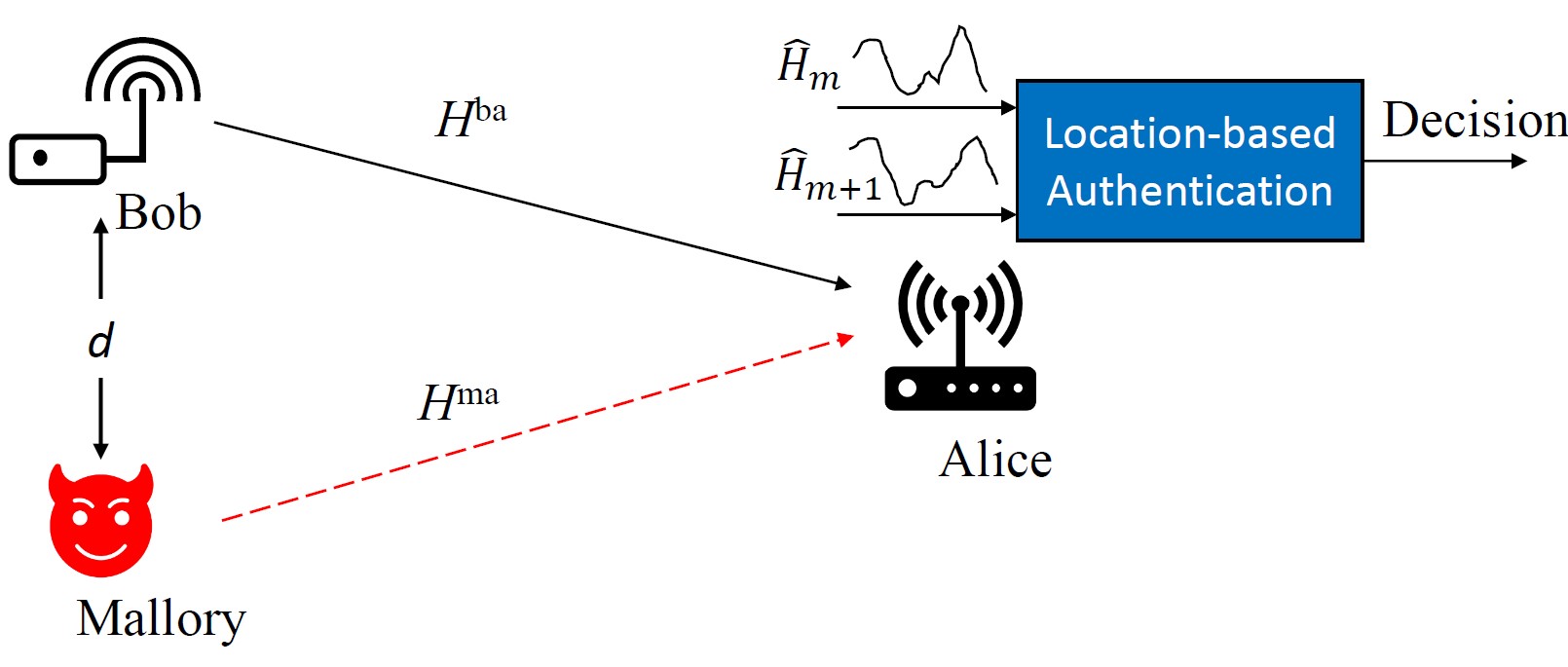

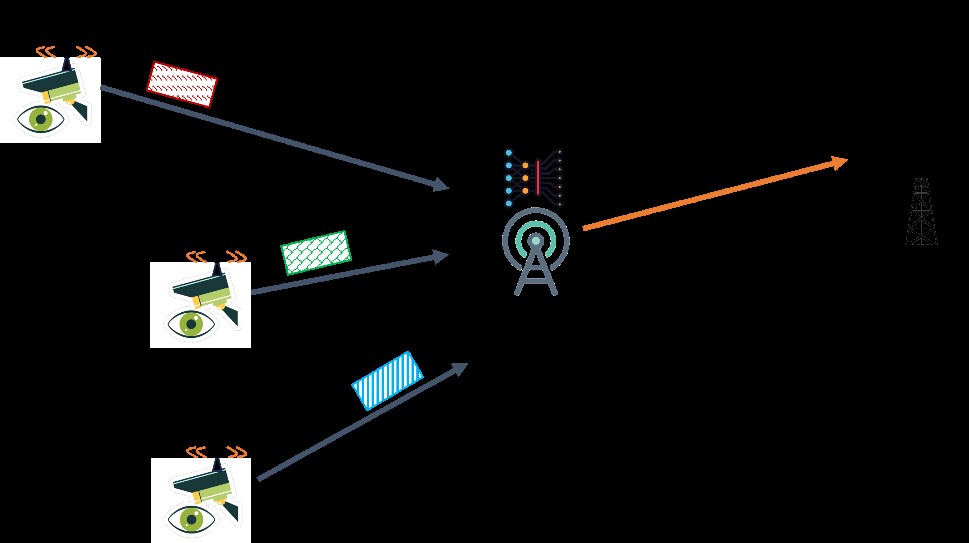
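
To make the constrained-optimization framing of MOMA concrete, the sketch below scalarizes two objectives of the kind MOMA targets (resource utilization and inter-node communication cost) into a weighted sum and finds the best feasible placement by exhaustive search under node capacity constraints. This is not the MOMA algorithm: the weights, the utilization and cost definitions, and the brute-force solver are assumptions for illustration. Exhaustive search scales as (number of nodes)^(number of services), so it only suits toy instances.

```python
from itertools import product
from typing import Dict, Optional, Tuple


def allocate(
    demand: Dict[str, float],
    capacity: Dict[str, float],
    traffic: Dict[Tuple[str, str], float],
    w_util: float = 0.5,
    w_comm: float = 0.5,
) -> Tuple[Optional[Dict[str, str]], float]:
    """Exhaustively search service-to-node placements (hypothetical toy solver).

    cost = w_util * (1 - utilization of active nodes) + w_comm * (cross-node traffic / total)
    Assumes a non-empty service set with positive demands.
    Returns (None, inf) if no placement is feasible.
    """
    services, nodes = list(demand), list(capacity)
    total_traffic = sum(traffic.values()) or 1.0
    best: Optional[Dict[str, str]] = None
    best_cost = float("inf")
    for choice in product(nodes, repeat=len(services)):
        placement = dict(zip(services, choice))
        load = {n: 0.0 for n in nodes}
        for svc, node in placement.items():
            load[node] += demand[svc]
        if any(load[n] > capacity[n] for n in nodes):
            continue  # violates a node capacity constraint
        active_capacity = sum(capacity[n] for n in nodes if load[n] > 0)
        utilization = sum(load.values()) / active_capacity
        cross = sum(v for (s1, s2), v in traffic.items() if placement[s1] != placement[s2])
        cost = w_util * (1 - utilization) + w_comm * cross / total_traffic
        if cost < best_cost:
            best, best_cost = placement, cost
    return best, best_cost


if __name__ == "__main__":
    demand = {"a": 2.0, "b": 2.0, "c": 1.0}        # CPU units per microservice
    capacity = {"n1": 4.0, "n2": 4.0}              # CPU units per node
    traffic = {("a", "b"): 10.0, ("b", "c"): 1.0}  # message volume between services
    # a and b share a node (heavy traffic); c goes to the other node.
    print(allocate(demand, capacity, traffic))
```

The weights express the trade-off between consolidating load and co-locating chatty services; a practical allocator would replace the exhaustive loop with a scalable heuristic or solver.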

The CNN outputs a score that quantifies the difference between channel states observed at different times, which is used to verify device identity. We evaluated this technique comprehensively on a Wi-Fi-based experimental platform, studying how the distance between legitimate and malicious devices affects authentication performance and assessing how well the CNN model generalizes across test scenarios. The results show that our CNN-based authentication approach outperforms traditional correlation-based methods. Detailed findings were published in the Proceedings of the IEEE Global Communications Conference (GLOBECOM).
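
For comparison, a traditional correlation-based baseline of the kind the CNN is evaluated against can be sketched in a few lines: the verifier stores a reference channel state information (CSI) amplitude vector for the legitimate device and accepts a new measurement if its Pearson correlation with the reference reaches a threshold. The threshold value, the vector format, and the function names are illustrative assumptions; the CNN replaces this hand-crafted similarity score with a learned one.

```python
import math
from typing import Sequence


def pearson(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Pearson correlation between two CSI amplitude vectors over the same subcarriers."""
    if len(h1) != len(h2) or len(h1) < 2:
        raise ValueError("vectors must have equal length >= 2")
    m1 = sum(h1) / len(h1)
    m2 = sum(h2) / len(h2)
    cov = sum((a - m1) * (b - m2) for a, b in zip(h1, h2))
    s1 = math.sqrt(sum((a - m1) ** 2 for a in h1))
    s2 = math.sqrt(sum((b - m2) ** 2 for b in h2))
    if s1 == 0 or s2 == 0:
        return 0.0  # a flat vector carries no shape information
    return cov / (s1 * s2)


def authenticate(reference: Sequence[float], probe: Sequence[float], threshold: float = 0.9) -> bool:
    """Accept the probe as the legitimate device if its CSI shape matches the reference."""
    return pearson(reference, probe) >= threshold


if __name__ == "__main__":
    reference = [1.0, 2.0, 3.0, 4.0]  # toy CSI amplitudes
    print(authenticate(reference, [2.0, 4.1, 6.0, 8.0]))  # True (same shape, scaled)
    print(authenticate(reference, [4.0, 3.0, 2.0, 1.0]))  # False (different shape)
```

A fixed threshold on a single correlation value is sensitive to distance and environment changes, which is the kind of limitation a learned score is intended to address.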
